max a'*x / b'*x

w.r.t x,

s.t. x>=0, 1'*x=1

Here a and b are given vectors, " ' " denotes the vector

transposition, x is our search variable which is a vector, 1 is also a

vector above. It means the element of x should sum up to 1.Any thoughts?

Thanks a lot!

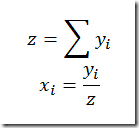

Sometimes. In slightly different notation, your model looks like:

We need to think about the denominator becoming zero. If we allow any b(i) this can easily happen. One possible way to make the problem well defined is to consider b(i)>0. (Alternatively all b(i)<0 will do). So let’s assume all b(i)>0.

We need to think about the denominator becoming zero. If we allow any b(i) this can easily happen. One possible way to make the problem well defined is to consider b(i)>0. (Alternatively all b(i)<0 will do). So let’s assume all b(i)>0.

First of all, this problem is not that difficult to solve for a general NLP solver. Good old MINOS is very quick on this even for large instances (e.g. 10k variables). The Hessian becomes big then, so solvers requiring second derivatives are in a disadvantage here. Note: there is a reformulation possible that reduces the size of the Hessian: write the objective as constraint of the form varobj*sum(i, b(i)*x(i))=sum(i,a(i)*x(i)).

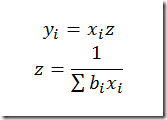

However, there is a reformulation possible into an LP. When we apply the transformation:

After solving we can recover the x variables through:

After solving we can recover the x variables through:

For more info about this transformation see section 4.3.2 in http://www.stanford.edu/~boyd/cvxbook/bv_cvxbook.pdf or section 2.10.2 in http://www.dashoptimization.com/home/downloads/book/booka4.pdf. I simplified things a little bit by using that x(i) sums to 1. The disadvantage of this transformation for more complex models is that we really need to change the complete model.

For more info about this transformation see section 4.3.2 in http://www.stanford.edu/~boyd/cvxbook/bv_cvxbook.pdf or section 2.10.2 in http://www.dashoptimization.com/home/downloads/book/booka4.pdf. I simplified things a little bit by using that x(i) sums to 1. The disadvantage of this transformation for more complex models is that we really need to change the complete model.

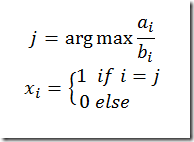

Alternatively we may exploit the structure of the model and just use the simple algorithm:

So we don’t even need linear programming here, just find the maximum of n numbers. The above is illustrated in the following GAMS model:

So we don’t even need linear programming here, just find the maximum of n numbers. The above is illustrated in the following GAMS model:

*------------------------------------------------------

* random data, b(i)>0

*------------------------------------------------------

set i /i1*i10000/;

parameter a(i), b(i);

a(i) = uniform(-10,10);

b(i) = uniform(1,10);

*------------------------------------------------------

* nonlinear model

*------------------------------------------------------

positive variable x(i) 'nlp solution';

variable obj1;

equations e_obj1, sum1;

e_obj1.. obj1 =e= sum(i, a(i)*x(i))/sum(i, b(i)*x(i));

sum1.. sum(i, x(i)) =e= 1;

* initial point

x.l(i) = 1/card(i);

model m1 /e_obj1,sum1/;

solve m1 maximizing obj1 using nlp;

*------------------------------------------------------

* transformed linear model

*------------------------------------------------------

positive variable y(i);

variable obj2;

equations e_obj2, sum2;

e_obj2.. obj2 =e= sum(i, a(i)*y(i));

sum2.. sum(i, b(i)*y(i)) =e= 1;

model m2 /e_obj2,sum2/;

solve m2 maximizing obj2 using lp;

*------------------------------------------------------

* recover x

*------------------------------------------------------

parameter pz,px(i) 'recovered solution';

pz = sum(i,y.l(i));

px(i) = y.l(i)/pz;

display x.l,px;

*------------------------------------------------------

* alternative algorithm

*------------------------------------------------------

parameter c(i); c(i) = a(i)/b(i);

scalar max; max = smax(i, c(i));

display max;

set jmax(i); jmax(i)$(c(i)=max) = yes;

abort$(card(jmax)<>1) "want a single max a(i)/b(i)";

parameter px2(i) 'alternative solution';

px2(jmax) = 1;

display px2;

No comments:

Post a Comment