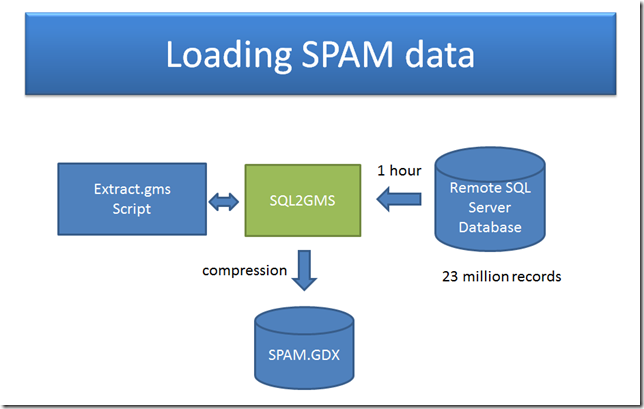

For a project we are converting data from a humongous table with 23 million records stored in a remote SQL Server database (a global spatial grid with data on agricultural production and land use from the Spatial Production Allocation Model). It takes about 1 hour to run the query and populate a GDX file; the slow performance is probably related to limited bandwidth. This is truly an example of “big data”. In a subsequent model we load the large GDX file into GAMS for further processing. This loading is much faster: about 4 seconds. It takes about 750 MB of RAM to load the data in GAMS.

For these types of applications, using a GDX file as a high-performance staging “database” is very convenient. It would be a nightmare to load this data directly from the SQL Server database into GAMS: every run would begin with a one-hour startup time.

Compression works quite well on this GDX file (see also: http://yetanothermathprogrammingconsultant.blogspot.com/2010/04/gamsgdx-compression.html). Here are the file sizes:

| Compression | File size in bytes |
|---|---:|
| Uncompressed | 303,114,020 |
| Compressed | 77,879,752 |

Users are increasingly throwing really large data sets at me. Besides the SQL Server database above, I was also handed a 1.2 GB DBF file. DBF files are still popular in GIS applications. This file could be imported into a compressed GDX file with the following size reduction (partly because we dropped some redundant columns, but mainly because GDX stores strings more efficiently):

| File format | Size |
|---|---:|
| dbf | 1.2 GB |
| compressed GDX | 62 MB |
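To put the two tables in perspective, here is a small Python sketch that turns the sizes into a compression ratio and a percentage saved. The helper `compression_stats` is a made-up name for illustration, and for the DBF case I assume 1 GB = 1000 MB; both of those figures are rounded, so the result is only approximate.

```python
def compression_stats(original_size: float, compressed_size: float) -> tuple[float, float]:
    """Return (ratio, percent_saved) for two sizes given in the same unit."""
    if original_size <= 0 or compressed_size <= 0:
        raise ValueError("sizes must be positive")
    ratio = original_size / compressed_size
    percent_saved = 100.0 * (1.0 - compressed_size / original_size)
    return ratio, percent_saved


# GDX file sizes in bytes, taken from the first table.
gdx_ratio, gdx_saved = compression_stats(303_114_020, 77_879_752)

# DBF vs. compressed GDX, both expressed in MB.
# Assumption: 1 GB = 1000 MB; the sizes in the second table are rounded.
dbf_ratio, dbf_saved = compression_stats(1.2 * 1000, 62)

print(f"GDX compression: {gdx_ratio:.1f}x smaller, {gdx_saved:.0f}% saved")
print(f"DBF to GDX:      {dbf_ratio:.1f}x smaller, {dbf_saved:.0f}% saved")
```

So GDX compression shrinks the file by roughly a factor of 4, while the DBF import ends up roughly 19 times smaller. The second number is not a pure compression effect, since some columns were dropped along the way.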
